Hashes and data integrity

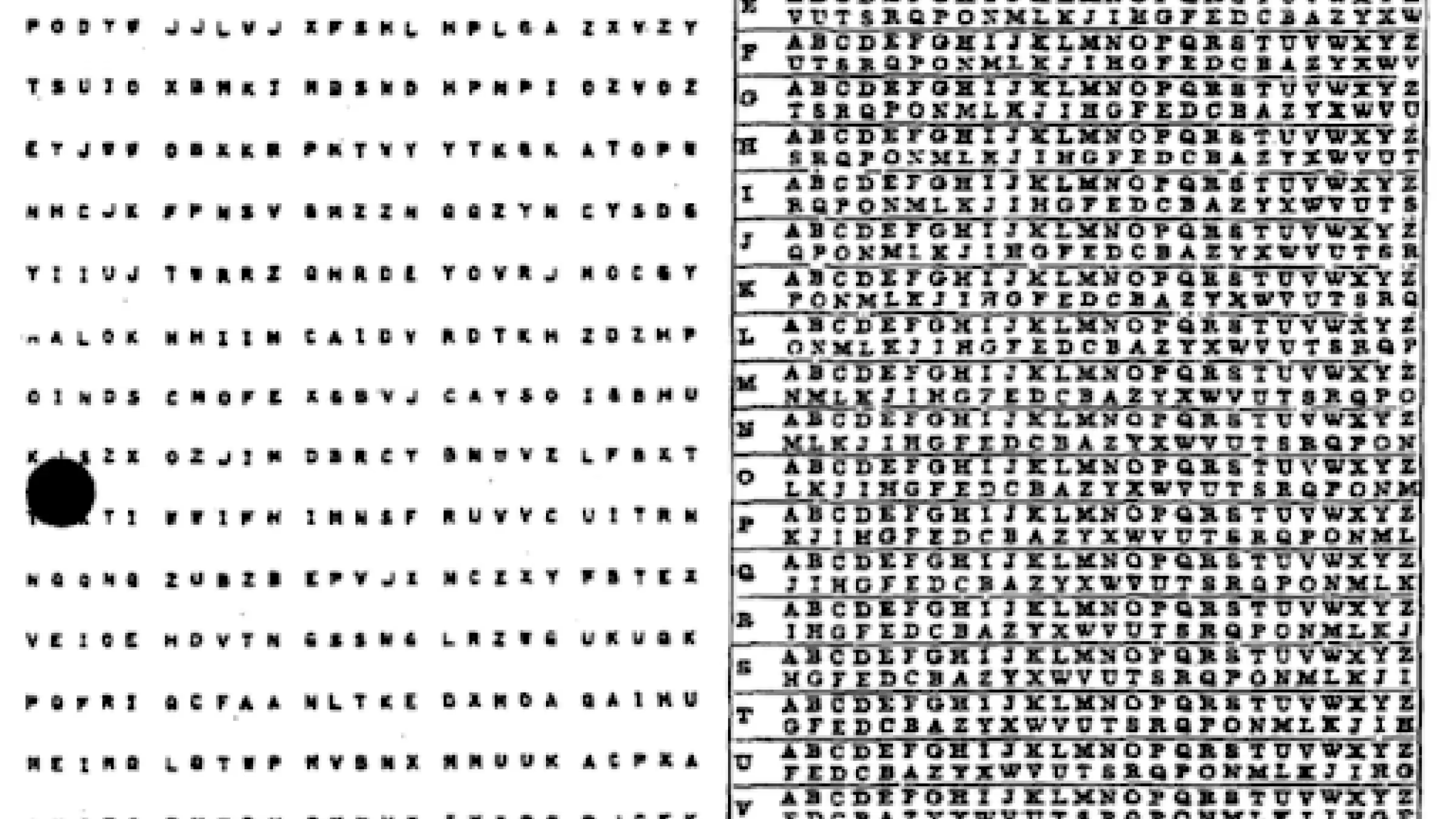

In our first blog on cryptography basics, we introduced symmetric ciphers, a basic encryption scheme using a one time pad.

While that method is sufficient for preventing message contents from being read, it doesn’t prevent interference. In our example, Eve will still be able to blindly modify the message to Bob’s non-detection. Encryption protects message confidentiality, but not necessarily integrity.

However, sometimes – such as signing a Bitcoin transaction – it is desirable to broadcast a message that is both readable by everyone and guaranteed authentic.

This post will focus on cryptographic hash functions, which play a foundational role in modern cryptography. First we’ll touch on normal hash functions, which form a fundamental part of most programming languages. Then we'll cover what the adjective “cryptographic” means when applied to hash functions, and how normal hash functions are optimized for efficiency over security.

Hash functions

A hash function takes input of any size, and produces a deterministic fixed size output. The same input always produces the same hash.

When two different inputs produce the same output, this is known as a collision. Collisions are usually undesirable but are to be expected. Good hash functions will minimize collisions and have well distributed output. After hashing many values, all of the outputs should be well distributed over the space..

Some implementations are Google's CityHash, as used in BigTable, or MurmurHash, used in Cassandra. The primary intention of these hashes is for use in building a hash table, a data structure that allows for efficient lookup of dynamic data. These hash functions are designed for use in big data systems and are heavily optimized for performance.

Most programming languages have a hashmap, which go by many different names. Examples include Python's dictionaries, JavaScript objects or Java’s explicitly stated HashMap. The idea of these implementations is to hash the key and use the result as a memory address for the data. This provides a data structure that usually provides constant time writes and reads, but not always. The occasional write will be in constant time, and in the worst case, even the reads will be O(n).

One strategy for handling collisions in a hashmap is to make a “linked list” at the colliding address. This means the hypothetical worst case performance is that of the linked list, O(n,) if an adversary were so inclined to search for these collisions. They’d need a program to search, and dedicate computer time, but in terms of engineering rigour, it is possible to happen, although not a practical concern.

Hashmaps usually manage the memory-collision tradeoff dynamically by using modulo arithmetic on the hash output. The smaller the modulo value, the less memory used, but also the more collisions. As data is inserted the implementation may decide to allocate more locations to avoid collisions, which will require an OS call to reserve more memory and move the current data. On average, hashmaps provide constant-time write and read times, but in the worst case hashmaps offer linear performance. In practice, however, these details only really matter to latency sensitive applications and coding interviews.

Cryptography requires additional requirements that regular hash functions do not provide. While a cryptographic hash function can usually replace a hash function with loss only in performance, using a hash function in place of a cryptographic hash function can lead to catastrophic failure. Regular hash functions are more efficient, but give up certain properties in exchange.

Cryptographic Hashes

Cryptographic hash functions are an essential building block to many cryptographic applications. They satisfy all of the requirements of a normal hash function while offering additional guarantees. A cryptographic hash function must provide additional properties in order for any implementation using it to be secure. If an implementation fails to uphold these promises, whether through oversight or negligence, it must be upgraded.

The additional properties a cryptographic hash needs to provide are:

- One way — Given a hash function and its output, the input must not be discoverable

- Collision resistance — Given a hash, it must be computationally impossible to find a collision. Usually called “preimage resistance” in the literature

- Avalanche effect — Changing a single bit of input must alter the output to remove correlation, so that there is no way to predict the resulting output without actually calculating the hash. While it's nice to have a well distributed hash function, it is a must for a cryptographic hash function.

Some common cryptographic hash functions are:

- SHA-2 — A family of hash functions, with SHA256 as the most common at the time of writing

- BLAKE — A more modern alternative to SHA256 that offers better performance

- MD5 — Now a broken alternative and reminder that security is a moving target. MD5 is still useful for error correction type uses, but no longer suitable for cryptographic applications

Each of these algorithms provides a mathematical black box that will uniquely fingerprint any input. The output of a hash function is usually called a hash or digest. The implementations of these algorithms are highly technical and not particularly generalizable, so we won’t mention how they work. Generally, robust implementations are offered with any major programming language.

As an example, the SHA256 digest of "hello" is

A secure cryptographic hash function provides assurance that no one is able to find another input to the hash function that will produce that particular output. The security of many digital systems rests on this assumption. In particular, this one-way fingerprinting mechanism provided by well-known implementations of secure hashing algorithms is essential to modern cryptography.

Applications

Cryptographic hash functions are widely used. To illustrate this, here are some applications they play an important role in.

Message Integrity

Hash functions can be used to protect against file tampering. Any file – be it a short message or a feature length movie – can be inputted to a hash function and uniquely fingerprinted. If a single bit of the file was changed and hashed again, the digest would change, revealing that change occurred.

For example, if Alice wanted to share a large file with Bob that she knew was uploaded somewhere, she could share the file hash along with a URL for the file. Bob could then download the file and be assured he received the information Alice intended for him without any tampering. Likewise, if Alice wanted to send a message and ensure it wasn't tampered with, she could hash her message and then encrypt the digest for a simple message authentication scheme.

A common way to protect messages is with HMAC, a symmetric message authentication scheme. The basic idea is for Alice and Bob to share a secret, similar to the one-time pad. This secret can be reused safely. The out-of-band exchange only needs to happen once, unlike one-time pads. When Alice wants to send a message, she will concatenate (join) the secret to the message and hash that, then attach the digest to the message. When Bob receives the message he will concatenate that same secret to the received message and compare the hashes. The would-be interceptor Eve is unable to know what the hash will be after the secret concatenation. Moreover, any tampering would be discovered, even if the message was in plain text and Eve knew the hashing algorithm used.

Merkle Trees

With larger files, it might be desirable to verify pieces of the file at a time, such as when distributed via P2P file networks. First the file is divided into chunks (say ~100KB to 10MB per chunk, although the size is somewhat arbitrary). Each chunk is then hashed, and the digests form leaves of a binary tree. The tree is constructed by concatenating (joining) pairs of leaves to form a parent layer with half as many nodes. This process is repeated until there is a single hash which acts as the root of the tree.

This construct is referred to as a Merkle tree, named after its inventor. Users only need to obtain a single root hash from a trusted source, and verify the rest of the Merkle tree can be obtained from anonymous peers. Once the Merkle tree is obtained, then any chunk of the file can then be verified comparing its hashed value to the expected value.

Merkle trees are a fundamental part of distributed networks that use cryptographic hash functions. If you understand them, you likely have a strong grasp on computer science. If not, don't worry too much. While Merkle trees play an important role in P2P systems and are an interesting application of cryptographic hash functions, they aren't foundational for cryptography basics covered by the rest of this series.

Bitcoin Mining

Satoshi Nakamoto observed that the only way to obtain a given hash that meets certain properties (e.g. starts with 4 all `0` bytes), is to hash many values. While finding a particular hash is entirely up to chance, finding them repeatedly will occur at a rate roughly equal to the computational power being used to calculate the hashes. With every 2016 blocks produced, Bitcoin will recalculate this value to target a 10 minute block time based on the time it took to mine the previous 2016 blocks, roughly every 2 weeks.

Armed with this observation Nakamoto was able to implement a practical solution to the Byzantine Generals problem in financial transaction ordering. In order to commit a block of transactions to the chain, a rare hash must be found. The rarity of this hash is determined by the amount of leading 0s it has. In exchange for finding the hash and committing the transactions to the public ledger, the minting address is rewarded with the transaction fees and the block reward. This is called proof of work, and is used to implement the timestamp server as described in the Bitcoin white paper. (In contrast, Polymesh uses proof of stake).

Competition for Bitcoin block rewards has created special purpose chips known as ASICs (Application Specific Integrated Circuit) that are specialized for SHA256. Despite the enormous hash power mining Bitcoin, the difficulty adjustment means the network autonomously adapts to the rapidly evolving compute environment.

Saving Passwords

Another use of hash functions is being able to verify someone else knows something, without actually knowing the information yourself. The example most people are familiar with is needing a password for online services. Although password managers often recommend that each service has a unique password, it cannot be expected that all users follow this advice. Storing user's plain text passwords along with their email will pose a considerable threat to the digital security of a significant portion of the list.

In this case, encrypting people's passwords provides only a superficial level of security, and creates a significant operational burden in managing the decryption keys. Every time the user attempts to log on, their password will need to be decrypted and then compared. Encryption is used for when the original message should be recoverable, but for passwords, we only care if the user entered the same password as when they made their account.

When storing passwords, it is much better to first hash the password and store the digest. Then when the user logs on, repeat the process and compare equality between the digests. Given the sheer ability for an attacker to perform hashes, and the fact that passwords do not follow a random distribution, a specialized scheme should be used. Storing passwords safely generally needs a system in which a cryptographic hash function plays an essential role. Two examples are the classic Bcrypt or the newer Argon2.

Hashing passwords is a commonly accepted practice. Ideally, only trusted people are able to access the database where they are stored. Hashed passwords are commonly used to provide protection against threats of a data breach or disgruntled employees. Plain text password storage is a major red flag in a security audit. Knowledge of the technique to cryptographic hashes is a prerequisite for handling user passwords responsibly.

The size of 2255

The above applications rely on the absence of collisions being highly unlikely. Despite computers being faster than we can imagine and continually improving, the mathematical space of 2255 is so vast that all of the world's computing power can only search a small fraction of it. The number of possibilities contained within 256 (NB: Anything raised to zero equals 1, 20 = 1) for "yes" or "no" questions is mind boggling, especially since 256 characters isn’t really that big. For example the binary representation of a SHA256 hash is only a few lines:

Even if every Bitcoin miner decided to devote their hardware solely to finding a collision, it would take many billions of years before they'd be expected to find another value with this output. Despite how powerful modern computers are, the number of possibilities contained in 256 bits far exceed their capabilities. Trying to visualize how many possibilities is impossible, but can help develop a sense for just how secure 256 bits of security really is.

Additional Resources

This 3blue1brown episode provides a powerful illustration about how many possibilities exist in 2255.

This site walks you through SHA256 implementation step by step.

The README for Google’s CityHash also has many threads to pull if you’re interested in learning more about the nitty gritty of hashes.

The next instalment by Polymesh developer Eric Richardson will introduce asymmetric cryptography, which will demonstrate how Alice and Bob can communicate securely without needing to exchange any secrets ahead of time.

The Polymesh Developer Portal is contains comprehensive documentation, specialized user journeys, and hands-on exercises for building on the purpose-built Polymesh network.